Your customers aren’t ghosting you. They’re ghosting your surveys.

It’s not that people don’t have opinions (they absolutely do). It’s that filling out a form feels like homework.

Surveys show up at the wrong time, in the wrong format, and demand way too much effort for too little emotional payoff. No wonder response rates keep shrinking, and the people who do answer tend to be the most annoyed, the most loyal, or the most bored… not the most representative.

Meanwhile, AI-powered chat interviews are flipping the script, because when feedback feels like a conversation, customers actually open up.

In this article, we’ll break down why traditional surveys are losing their power, why chat-based feedback works better, and how AI interviewers can help you go from checkbox answers to real customer truth.

Why Traditional Surveys Don’t Work Anymore

Surveys used to be the main way to understand customers. Today, they often do the opposite: instead of building a connection, they create distance. The problem isn’t that people don’t care; it’s that surveys make it hard to share what they really think.

Here’s why the old approach doesn’t work anymore.

1. People Are Simply Tired of Surveys

Everywhere you go, someone’s asking for feedback: after an order, during a call, inside an app, or through an email. That constant stream trains people to ignore surveys altogether. Researchers call this survey fatigue: feeling overexposed to questions and underwhelmed by the experience of answering them.

Response Rates Keep Dropping

Across industries, participation has slipped to around 5–30%, depending on channel and audience. Most people skip surveys because they expect them to be long, repetitive, and unrewarding. It’s not a lack of opinions; it’s self-protection from another form that feels like work.

Tired Answers Hurt Data

Even when people do respond, they often “go through the motions.” They pick options at random, give every item the same rating, or write one-word replies. This is known as satisficing: doing the minimum needed to finish quickly.

The result: numbers that look clean on a dashboard but hide the messy, real reasons behind them.

When surveys start exhausting rather than engaging people, the insight they produce weakens too.

2. Too Much Thinking, Not Enough Talking

This fatigue connects directly to another issue: mental overload. When you ask customers to fill out a survey form, you’re asking them to compress everything they feel, remember, and experience into a list of static questions. But humans don’t think in clean rows of checkboxes or 1–10 scales; they think in stories, full of context, emotion, contradictions, and subtle detail.

Forms Miss the “Why”

Every customer’s story is different.

- A first-time buyer worries about clarity.

- A loyal user wants speed.

- A churn risk feels emotionally disconnected.

Yet surveys treat them all the same. Generic questions miss the “why” behind behavior, flattening rich experiences into numbers that say little.

When Thinking Too Much Makes Answers Worse

The more mental effort a survey demands, the less accurate people’s answers become. Each question forces small decisions: “What does this scale mean?”, “Where do I fit?”, “What’s closest to what I feel?” All of that adds cognitive load: the mental strain of processing too much at once.

Research shows that when working memory gets overloaded, quality drops. In surveys, that looks like shorter answers, skipped questions, or middle-of-the-scale responses that feel “safe.” People stop reflecting and start guessing.

So even before someone quits the survey, their answers might already be flattening out.

Why Longer Surveys Lose More People

It’s easy to think one more question won’t hurt, but it does. Every added item slightly raises the effort needed to stay engaged. The effect stacks: five questions are fine, ten are tiring, fifteen feel endless.

Completion rates keep falling as forms grow longer, and poor mobile layouts or unclear wording make it worse. What starts as a quick ask turns into a mental chore.

Once that happens, people stop caring about accuracy. They just want out. That’s the point where even your best-designed survey turns into noise.

3. Customers Don’t Feel Heard with Surveys

All this effort would still be worth it if people saw a result. But most don’t. At some point, “We value your feedback” stopped sounding sincere and started sounding like boilerplate. Customers fill out a form, see no change, and conclude that their words disappeared without a trace.

A Gartner study found that only 16% of customers believe their feedback leads to real action. That lack of visible response creates a trust gap: the point at which people stop believing their voice matters.

So you end up with a double gap:

- Perception gap: customers don’t believe their feedback drives change.

- Communication gap: companies collect data but don’t show that they listened.

Once that trust gap opens, response rates suffer for reasons that have nothing to do with timing or incentives. Customers aren’t only tired of surveys; they’re tired of talking into what feels like a void.

To repair that, the feedback experience has to move away from extraction and toward conversation: a format where people feel heard in real time, see their input acknowledged, and can share the story behind their score, not just the score itself.

How You Can Fix All That with Chat

When feedback feels like a text chat rather than a form, participants shift gears: the exchange feels lighter and more natural, and it adapts to their pace and story.

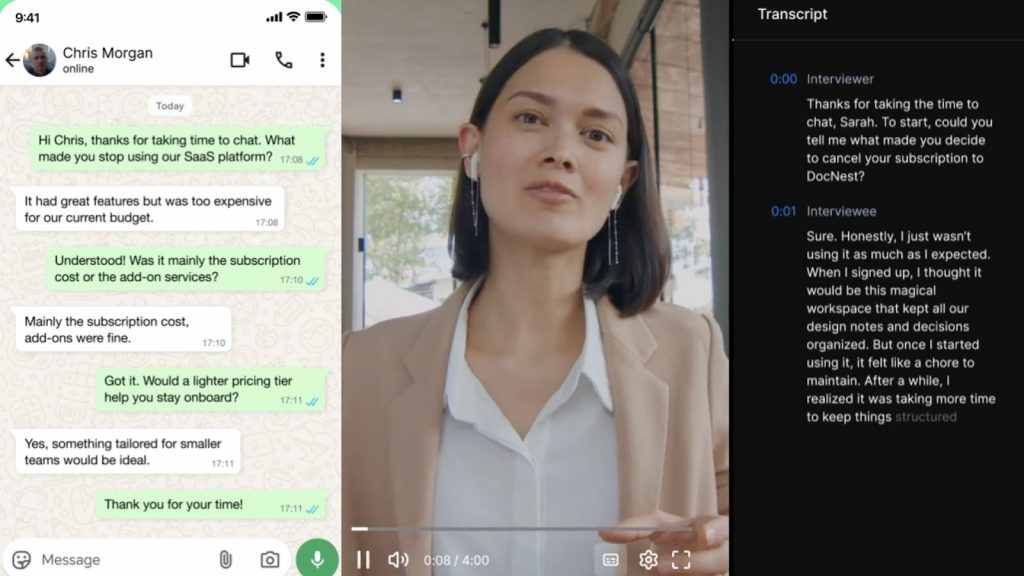

Chat-based feedback mirrors how people already communicate: casual, turn-based, and contextual. It also taps into a behavioral pattern we’re seeing more and more: people open up to a conversational AI more readily than they do to a structured form.

Because the cognitive barrier is lower (less scanning, fewer rigid grids), chat formats tend to yield richer, longer, and more candid responses. In one study, 88% of participants said chat surveys were “much more” or “somewhat more” enjoyable than traditional online surveys. Research comparing chatbot-based conversational surveys with standard web surveys has also found significantly higher-quality data, measured by the informativeness, relevance, and length of open-text replies.

Here are the core advantages:

- Lower friction: chat interrupts less, uses a familiar UI (message bubbles), and fits mobile and spontaneous moments.

- Adaptive follow-ups: instead of a fixed list, chat can ask “Why did you choose that?” or “Tell me what you mean by slow,” uncovering root causes.

- Deeper narratives: participants feel heard and describe real-world experiences.

- Higher engagement: greater enjoyment correlates with longer, more thoughtful responses instead of quick, superficial ticks.

Conversation clearly works for humanizing feedback; the next challenge is scaling it without moderator bias. Chat formats let you replicate the conversational style at scale, so every participant feels like part of a two-way dialogue rather than someone just filling out a form.

AI Interviewers at Scale and How They Work

Once you move feedback into chat, the next step is obvious: you don’t want one human moderator running 50 conversations at once. That’s where AI interviewers come in. They take the good parts of a live interview (follow-ups, nuance, tone) and combine them with the scale and consistency of software.

What AI Interviewers Actually Do

An AI interviewer is essentially a guided, multi-turn chat flow that:

- asks questions in a conversational way

- adapts follow-ups based on what the person just said

- runs asynchronously (people can answer when it suits them)

- keeps a neutral, non-judgmental tone every single time

Instead of pushing everyone through the same rigid form, the AI reacts: if someone says, “I almost churned at checkout,” it can ask, “What happened at that point?” If they mention “price,” it can probe on willingness to pay, alternatives, or perceived value, all without a researcher having to be in the room.
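To make the follow-up idea concrete, here is a minimal Python sketch of a multi-turn interview loop. It is an illustration under stated assumptions, not how Prelaunch or any other product actually works: the keyword rules in `FOLLOW_UPS`, the `Interview` class, and the `answer_fn` callback are all hypothetical, and simple keyword matching stands in for the language model a real AI interviewer would more likely use to decide what to ask next.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical probe rules: keyword -> follow-up question.
# Keyword matching is only a stand-in for the language model a real
# AI interviewer would likely use to decide what to ask next.
FOLLOW_UPS = {
    "churn": "What happened at that point?",
    "price": "How does the price compare with the value you get?",
    "slow": "Tell me what you mean by slow.",
}


@dataclass
class Interview:
    """Fixed core questions, asked in the same order, plus adaptive probes."""

    core_questions: list[str]
    max_probes_per_answer: int = 1
    transcript: list[tuple[str, str]] = field(default_factory=list)

    def follow_ups_for(self, answer: str) -> list[str]:
        """Return probes whose keyword appears in the answer (case-insensitive)."""
        text = answer.lower()
        probes = [question for keyword, question in FOLLOW_UPS.items() if keyword in text]
        return probes[: self.max_probes_per_answer]

    def run(self, answer_fn: Callable[[str], str]) -> list[tuple[str, str]]:
        """Ask each core question, then any triggered probes, recording every turn.

        answer_fn stands in for the participant: it receives a question and
        returns their reply. Probe answers are recorded but not probed again.
        """
        for question in self.core_questions:
            answer = answer_fn(question)
            self.transcript.append((question, answer))
            for probe in self.follow_ups_for(answer):
                self.transcript.append((probe, answer_fn(probe)))
        return self.transcript


# Usage with canned replies in place of a real participant.
replies = {
    "How was your last purchase?": "I almost churned at checkout.",
    "What happened at that point?": "The shipping cost appeared late.",
    "Anything else?": "No, that's it.",
}
interview = Interview(["How was your last purchase?", "Anything else?"])
for q, a in interview.run(lambda question: replies[question]):
    print(f"Q: {q}\nA: {a}")
```

Keeping the core questions in a fixed order and letting only the probes vary gives every participant the same interview skeleton, while the follow-ups still react to what each person actually said.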

Scale, Consistency, and Synthesis

With traditional interviews, three things are hard:

- Scale: you can only run so many per week.

- Consistency: moderators drift, paraphrase, or lead unintentionally.

- Analysis: hours of transcripts to mine for patterns and quotes.

Prelaunch AI Interviewer is built specifically for this kind of work, and it flips all three:

- It runs chat-native interviews in parallel, across time zones, via WhatsApp, where people already talk.

- It supports 30+ languages, so you can reach customers in their own language, not just in English.

- It asks every question the same way and follows the same ordering logic, avoiding moderator drift and reducing human bias.

- It automatically structures outcomes within hours: tagging themes, clustering similar answers, and surfacing representative quotes, so you’re not manually combing through raw text.
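The synthesis step can be illustrated with a deliberately simple sketch: tag each answer with themes, count how often each theme comes up, and surface one representative quote per theme. This is a toy built on assumptions, not Prelaunch’s pipeline; the `THEMES` lexicon and the function names `words`, `tag_themes`, and `summarize` are hypothetical, and production tools would more likely group similar answers with embeddings or a language model than with fixed keyword lists.

```python
from collections import defaultdict

# Hypothetical theme lexicon. Production tools would more likely group
# answers with embeddings or a language model than with fixed keywords.
THEMES = {
    "pricing": {"price", "expensive", "cost", "cheap"},
    "speed": {"slow", "fast", "lag", "wait"},
    "checkout": {"checkout", "payment", "cart"},
}


def words(text: str) -> set[str]:
    """Lowercased words with surrounding punctuation stripped."""
    return {w.strip(".,!?;:\"'").lower() for w in text.split()}


def tag_themes(answer: str) -> list[str]:
    """Return every theme with at least one keyword in the answer, in THEMES order."""
    found = words(answer)
    return [theme for theme, keywords in THEMES.items() if found & keywords]


def summarize(answers: list[str]) -> dict[str, dict]:
    """Count answers per theme and pick one representative quote for each.

    The representative quote is the answer sharing the most keywords with the
    theme; max() returns the first maximal item, so ties go to the earliest answer.
    Themes are ordered by how many answers mention them.
    """
    grouped = defaultdict(list)
    for answer in answers:
        for theme in tag_themes(answer):
            grouped[theme].append(answer)

    summary = {}
    for theme, quotes in grouped.items():
        best = max(quotes, key=lambda q: len(words(q) & THEMES[theme]))
        summary[theme] = {"count": len(quotes), "quote": best}
    # sorted() is stable, so themes with equal counts keep first-seen order.
    return dict(sorted(summary.items(), key=lambda item: -item[1]["count"]))


answers = [
    "Checkout was slow and the payment page froze.",
    "Too expensive for what it does.",
    "The price is fine but the cart kept emptying at checkout.",
    "Support was great.",
]
for theme, info in summarize(answers).items():
    print(f"{theme}: {info['count']} mention(s), e.g. {info['quote']!r}")
```

Keyword tagging like this ignores sentiment: “fast” and “slow” land in the same theme, and an answer can belong to several themes at once. That limitation is one reason real synthesis tools go beyond word lists.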

Chat-based AI interviews can elicit longer and more informative open-ended responses than standard web surveys, while also improving the participant experience (higher enjoyment, lower boredom, and greater willingness to continue). That’s a strong combination if you care about both data quality and scale.

Conclusion

Your customers aren’t any less opinionated than they were five years ago. They’ve just learned that long forms, generic scales, and quick surveys rarely lead to anything meaningful for them. That’s what sits underneath survey fatigue: too many asks, too little listening, and a format that makes it hard to say what really happened.

AI chat interviewers don’t magically make people care more; they just stop getting in the way. A lightweight, back-and-forth conversation lowers the effort, brings back context and emotion, and gives people room to explain the “almost churned at checkout” moment instead of compressing it into a 7/10. When that conversation is powered by an AI interviewer, you get all of that plus scale, consistency, and structured insight you can actually act on.

If you’re ready to move from checkbox feedback to real customer truth, you can join the waitlist for Prelaunch AI Interviewer and start testing chat-native interviews for your own product: