Every great product starts with a conversation. But not every team has time to run one properly. Customer interviews are still the most powerful way to understand why people choose, switch, or ignore your product. Yet most teams admit they don’t do enough of them. Why? Because in-person interviews take time: scheduling, note-taking, transcribing, and analyzing.

AI-moderated interviews are changing that. They automate the heavy lifting, running dozens of conversations in parallel, keeping tone and timing consistent, and summarizing findings within hours. The result isn’t fewer human insights, but faster, cleaner ones.

In this article, we’ll compare AI-moderated and human-moderated interviews side by side: what each does best, where each falls short, and how teams can choose the right approach to get deeper, more reliable customer understanding.

Quick Comparison: AI vs. Human Moderators

| Dimension | Human Moderator | AI-Moderated Interview |
| --- | --- | --- |
| Empathy & Emotion | Builds rapport; reads subtext well | Neutral, non-judgmental; encourages candid answers |
| Speed & Scale | Sequential, capacity-limited | Parallel, 24/7; insights in hours |
| Consistency | Varies by interviewer; prone to drift | Identical phrasing/pacing; easy to compare and audit |
| Cost & Resources | Higher setup and labor costs | Lower marginal cost; feasible for small teams |
| Best For | Sensitive, exploratory, exec-visible sessions | Validation, trend scans, frequent sentiment checks, large N (number of observations) |

Human-Moderated Interviews

The Human Advantage

Human moderators bring what no algorithm can: empathy and emotional intelligence. They can notice hesitation, tone changes, or a flicker of discomfort, signals that reveal what participants won’t always verbalize. Skilled moderators turn those subtle cues into discovery moments, guiding conversations toward honesty and context. This is why human-moderated interviews remain the gold standard for uncovering deep motivations, cultural nuance, and lived experiences.

There are also situations where having a person in the (virtual) room changes the outcome entirely. Early-stage discovery, brand and positioning work, or stakeholder-facing sessions often depend on nuance, tone, and relationship-building as much as on the questions themselves. In these contexts, the moderator is constantly “reading the room,” rephrasing in real time, and choosing where to lean in or back off.

Humans excel at creating safe spaces, especially for emotionally charged or sensitive topics like money, health, or identity. They listen between the lines, reflect emotions back, and help participants put fuzzy experiences into words. When trust, story, and perception are the main drivers of insight, human moderation is not just helpful; it’s essential.

Where Humans Struggle

But depth has its limits. Every session requires presence, scheduling, transcription, and interpretation. According to a JMIR AI comparative study, human-moderated interview workflows take two to three times longer to complete than semi-automated alternatives, often delaying insight delivery by weeks.

Another report on qualitative research adds that rising costs and researcher fatigue have become major barriers to running continuous interview programs. Simply put, human moderators don’t scale easily. Each additional session requires more coordination, budget, and analysis time.

The Bias Problem

Even the best moderators bring themselves into the room. Their tone, phrasing, or even facial expressions shape how participants respond. This phenomenon, often called moderator drift, can make data harder to compare across sessions. Variation in delivery is one of the most common causes of qualitative data inconsistency.

That doesn’t mean humans are unreliable; it means they’re human. Their greatest strength (empathy) is also their biggest variable.

AI-Moderated Interviews

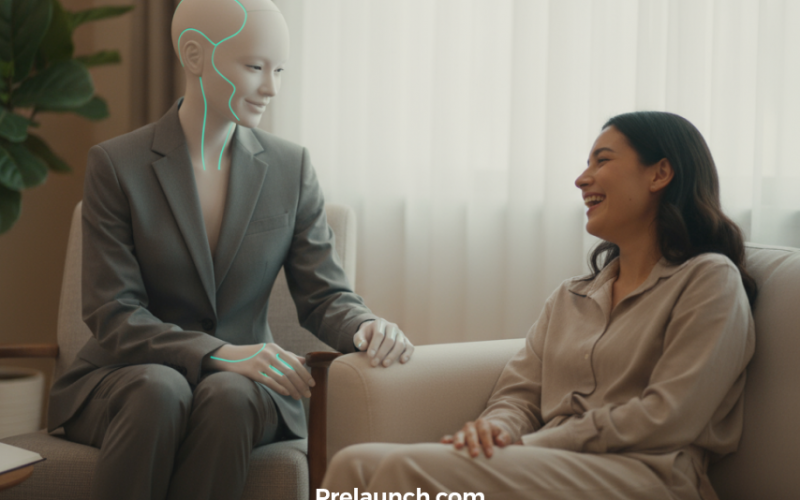

How AI Interviews Work

AI-moderated interviews remove the limits of human scheduling. Instead of arranging dozens of one-on-one calls, teams can now run hundreds of structured conversations simultaneously, day or night. The system recruits, moderates, records, transcribes, and tags responses automatically.

Platforms like Prelaunch AI Interviewer (and other similar AI interviewers, such as Listen Labs, Strella, and Qualz) make this workflow seamless. They manage setup, execution, and synthesis, allowing researchers to spend their time understanding meaning, not managing logistics.

According to NewtonX (2025), AI-moderated studies can run up to 70% faster and at 60% lower cost than traditional moderated formats. That speed means insights can feed directly into design, marketing, or feature planning without delay, transforming customer research into a continuous feedback loop.

Consistency Without Human Variation

Every participant in an AI-moderated interview experiences the same tone, pacing, and underlying branching logic for follow-up questions. The AI interviewer adapts its probes based on each answer, but it does so using a shared, repeatable set of rules, so every respondent is guided toward similarly deep insight. This consistency eliminates the “moderator drift” that often occurs when human researchers unintentionally shape responses through tone or phrasing.
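
To make the idea of a "shared, repeatable set of rules" concrete, here is a minimal sketch, assuming a simple keyword-based rule table. The rule contents, `PROBE_RULES`, and `next_probe` are hypothetical illustrations, not how any named platform actually works; real AI interviewers likely use far richer logic. The point is only that every respondent is routed through the same rules.

```python
# Hypothetical probe rules: (keywords to look for, follow-up question).
# These are illustrative assumptions, not any vendor's actual logic.
PROBE_RULES = [
    (("expensive", "price", "cost"), "What would feel like a fair price, and why?"),
    (("confusing", "hard", "difficult"), "Which step was hardest to get through?"),
    (("switched", "competitor"), "What made you decide to switch?"),
]

DEFAULT_PROBE = "Can you tell me more about that?"


def next_probe(answer: str) -> str:
    """Return the first follow-up whose keywords appear in the answer.

    The same rule table is applied to every respondent, so two people
    giving similar answers receive the same follow-up question.
    """
    text = answer.lower()
    for keywords, probe in PROBE_RULES:
        if any(word in text for word in keywords):
            return probe
    return DEFAULT_PROBE
```

Because the rules live in one table, a researcher could review or audit them in one place rather than reconstructing what each interviewer asked.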

Because the AI moderator operates without emotional or cognitive bias, every participant experiences the same moderation quality: a calm tone, steady pacing, and equal attentiveness, regardless of who they are or when they join.

While the specific questions adapt dynamically to each participant’s answers, the AI’s neutral delivery makes the collected insights easier to segment, categorize, and compare across personas or themes. Teams can spot trends faster and draw stronger conclusions, knowing every respondent faced the same context.

Participants also tend to complete AI-moderated sessions more willingly. Studies show 10–15% higher completion and comfort rates compared to human-led formats, largely because respondents feel less judged and freer to answer honestly.

Where AI Still Has Gaps

Even the best AI systems can’t yet interpret emotion or subtext the way humans do. They may miss irony, cultural nuance, or the emotional hesitation behind a response. Without a well-crafted guide and ethical oversight, the experience can feel overly mechanical or shallow.

That’s why AI works best in structured scenarios like validation studies, trend tracking, sentiment monitoring, or large-sample interviews where consistency and speed matter more than emotional depth.

Choosing Between Human and AI Interviews

Start with a quick scan of five levers: goal, sensitivity, sample size, timeline, and stakeholder risk.

If you’re exploring a fuzzy problem or telling a story that lives in tone and context, a person in the room changes the conversation. If you’re looking to collect deep, structured insights across many segments in a tight sprint, precision and repeatability beat charm.

Modern tools make switching between human and AI interviews seamless. You can run interviews automatically, get full transcripts and synthesized insights, and even trigger new interviews when metrics (like churn) spike. This keeps learning continuous without slowing down the team.
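
As a rough sketch of what "trigger new interviews when churn spikes" might look like, assume a spike means the current churn rate exceeds the recent average by some relative margin. The function name `churn_spiked` and the 25% default tolerance are assumptions chosen for illustration, not a documented feature of any tool.

```python
from statistics import mean


def churn_spiked(history: list[float], current: float, tolerance: float = 0.25) -> bool:
    """Return True when current churn exceeds the recent average by more
    than `tolerance` (a relative margin; 0.25 means 25% above baseline).

    `history` holds churn rates from earlier periods. With no history
    there is no baseline, so no spike is reported.
    """
    if not history:
        return False
    baseline = mean(history)
    return current > baseline * (1 + tolerance)


# Example: monthly churn held at 4%, then jumps to 6%.
if churn_spiked([0.04, 0.04, 0.04], 0.06):
    print("Churn spike detected: queue a new round of interviews")
```

In practice the threshold would need tuning so that normal month-to-month noise does not launch a study every time.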

Choose Human when

- The problem space is new/ambiguous, and you need a rich narrative context

- Topics are sensitive (money, health, identity), and trust is the insight

- Sessions are executive-visible, and tone/perception matter

- You expect messy, branching conversations that benefit from improvisation

Choose AI when

- You need rich, qualitative insights across personas/markets at scale, without running dozens of live sessions yourself

- You’re running continuous check-ins (sentiment, churn drivers, feature adoption)

- Strict consistency, auditability, and quick synthesis matter

- You’re validating what small-N discovery already surfaced
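
The two checklists above can be sketched as a small decision helper built on the five levers. This is one possible reading, not a definitive rule: the `StudyContext` fields, the `large_n` and `tight_days` thresholds, and the choice to let any human-favoring lever win are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class StudyContext:
    exploratory: bool     # goal: new or ambiguous problem space
    sensitive: bool       # sensitivity: money, health, identity
    sample_size: int      # planned number of interviews
    days_available: int   # timeline
    exec_visible: bool    # stakeholder risk


def recommend_moderator(ctx: StudyContext, large_n: int = 30, tight_days: int = 7) -> str:
    """Suggest "human", "ai", or "either" from the five levers.

    Thresholds are hypothetical defaults; adjust them to your team.
    """
    # Assumption: trust, narrative, and perception outweigh scale,
    # so any human-favoring lever decides the outcome first.
    if ctx.exploratory or ctx.sensitive or ctx.exec_visible:
        return "human"
    # Otherwise, scale or time pressure favors AI moderation.
    if ctx.sample_size >= large_n or ctx.days_available <= tight_days:
        return "ai"
    return "either"


# Example: validating earlier findings across 200 respondents in a one-week sprint.
validation = StudyContext(False, False, 200, 5, False)
print(recommend_moderator(validation))
```

A helper like this is only a starting point for discussion; mixed cases (for example, a sensitive topic at large scale) may call for a blend of both approaches.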

Whichever path you take, the craft still decides quality: write a tight guide, pretest for wording/order bias, set clear data-handling rules, and be transparent about how the interview runs (including AI use). Let humans carry the moments where empathy changes the outcome; let AI carry the load where speed and comparability change the value.

Conclusion

Humans hear the meaning between the lines; AI keeps the pace and scale. That’s the core trade-off. If the goal is empathy, context, and story, a human moderator still leads. If the goal is consistency, coverage, and speed, AI turns interviews into a reliable signal you can use this week. In other words, “better” is context-fit: pick the moderator that unlocks clarity fastest for the decision you need to make.

What’s next is less about choosing sides and more about building a rhythm. Interviews are becoming continuous, data-rich, and operational, plugged into analytics, triggered by churn, and summarized in hours. Let AI handle the first pass and bring humans in where depth, trust, and interpretation truly matter.

If your next sprint needs faster, cleaner signals, start there: AI first, human where it counts.

Ready to try it at scale? Join the early-access waitlist for Prelaunch AI Interviewer: get in early, and we’ll add bonus interview credits at launch.